Over the last few years, artificial intelligence has been rapidly changing how organizations find, evaluate, and hire candidates. Firms are increasingly adopting AI-powered tools to streamline hiring, automate resume screening, and identify top talent more efficiently.

However, as the global hiring market becomes more data-driven, a significant concern has emerged: bias in AI algorithms. Although these systems promise efficiency and are meant to be fair, many of them have unintentionally reproduced the very kinds of discrimination they were supposed to eliminate. Can developers really create fair hiring algorithms? The answer is complicated, and it starts with recognizing how bias enters AI systems while they are being built.

Understanding AI Bias in Recruitment

AI bias refers to situations in which algorithms produce unfair or discriminatory outcomes because of biased training data or flawed model design. In hiring, this can happen without anyone noticing: a system may favor graduates of certain schools over others, or quietly rank a CV lower because the applicant is a woman, Black, or older. Machine learning models learn from the historical data they are given. If that data reflects past inequalities or hiring patterns, AI Powered Solutions trained on it will reproduce those biases and sometimes even amplify them. For example, if a technology company's historical hiring data shows that most engineering hires were men, an AI system trained on that data may favor male candidates for engineering roles even though no one programmed it to.

A well-known example is a major tech company that scrapped its AI recruitment tool after it was found to downgrade resumes containing the word “women’s,” as in “women’s chess club captain.” The algorithm had picked up this pattern from training data dominated by men's resumes. The case shows that AI bias is not always about intent; it is about impact.
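The mechanism can be shown with a deliberately tiny sketch. This is not the tool from the example above; it is a hypothetical word-based scorer that learns one weight per resume word as the smoothed log-odds of being hired in made-up historical data. Because past hires in that data skew male, a word that says nothing about skill ends up with the most negative weight.

```python
import math
from collections import Counter

# Hypothetical historical data: (resume words, was_hired). Past hires skew male,
# so resumes mentioning "women's" rarely appear among the positive examples.
history = [
    ({"python", "chess", "captain"}, True),
    ({"java", "captain"}, True),
    ({"python", "hackathon"}, True),
    ({"python", "women's", "chess", "captain"}, False),
    ({"java", "women's", "hackathon"}, False),
    ({"python", "java"}, True),
]

def token_weights(data, smoothing=1.0):
    """Learn a per-word score: smoothed log-odds of 'hired' vs 'rejected' given the word."""
    hired, rejected = Counter(), Counter()
    for tokens, was_hired in data:
        (hired if was_hired else rejected).update(tokens)
    vocab = set(hired) | set(rejected)
    return {
        t: math.log((hired[t] + smoothing) / (rejected[t] + smoothing))
        for t in vocab
    }

weights = token_weights(history)
for token in sorted(weights, key=weights.get):
    print(f"{token:10s} {weights[token]:+.2f}")
```

Nothing in the code mentions gender; the penalty comes entirely from the skewed history, which is why removing explicit demographic fields is not enough on its own.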

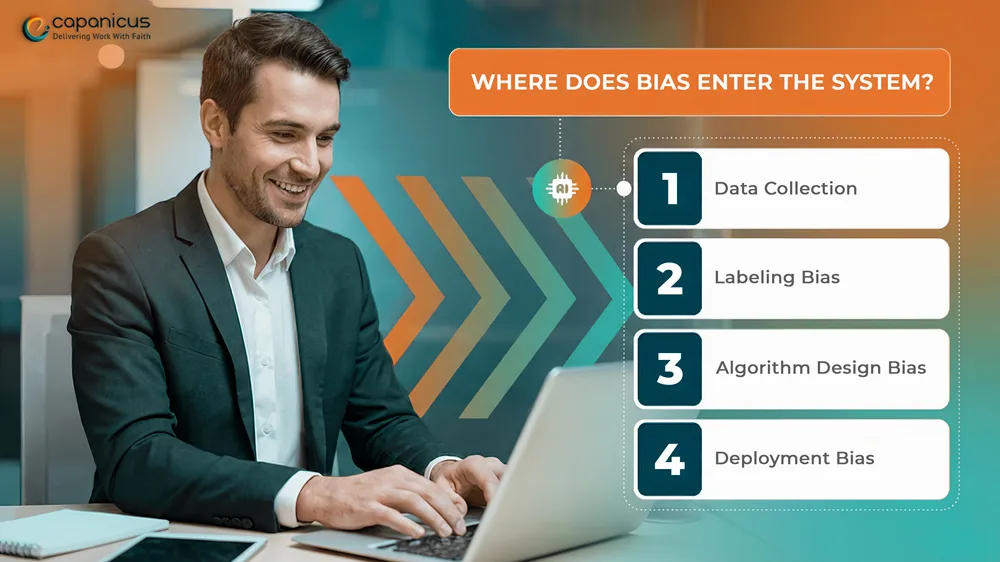

Where Does Bias Enter the System?

Bias can enter AI Powered Solutions at several stages of development, often without developers noticing. Knowing where bias comes from is essential when evaluating whether an algorithm is fair.

Data Collection Bias: Data is the foundation of any AI system. If the training data lacks diversity or overrepresents certain demographics, the AI will produce biased results. For example, a dataset made up mostly of resumes from a single region or educational background can introduce regional or institutional bias.
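A first check for data collection bias is to compare the dataset's composition with the population the model is meant to serve. The sketch below assumes each training record carries a `region` field and that you have reference shares, for example from your applicant pool; both the field name and the numbers are hypothetical.

```python
from collections import Counter

def representation_gaps(records, attribute, reference_shares):
    """Dataset share minus reference share for each group of `attribute`.

    Negative values mean the group is underrepresented relative to the reference.
    Groups present in the data but absent from `reference_shares` are ignored.
    """
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }

# Hypothetical training resumes and hypothetical applicant-pool shares by region.
resumes = [{"region": "north"}] * 8 + [{"region": "south"}] * 2
reference = {"north": 0.5, "south": 0.3, "east": 0.2}

gaps = representation_gaps(resumes, "region", reference)
underrepresented = [g for g, gap in gaps.items() if gap < -0.05]
print(gaps)
print(underrepresented)
```

The -0.05 tolerance is an arbitrary choice for illustration; a region with no resumes at all (here `east`) shows up with a gap equal to its full reference share.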

Labeling Bias: Human annotators who label the data, for example by marking resumes as “qualified” or “unqualified,” may be influenced by unconscious stereotypes, and those judgments then become part of the training data.
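One way to surface labeling bias is to compare how often each annotator marks candidates from different groups as “qualified.” This sketch assumes labels arrive as hypothetical `(annotator, group, qualified)` tuples and that each annotator saw resume pools of comparable quality for every group; without that assumption, a gap may reflect the pools rather than the annotator.

```python
from collections import defaultdict

def qualified_rates(labels):
    """Map (annotator, group) -> share of resumes that annotator marked qualified."""
    counts = defaultdict(lambda: [0, 0])  # [qualified, total]
    for annotator, group, qualified in labels:
        counts[(annotator, group)][0] += int(qualified)
        counts[(annotator, group)][1] += 1
    return {key: q / n for key, (q, n) in counts.items()}

def flag_annotators(labels, max_gap=0.2):
    """Annotators whose qualified rate differs between groups by more than `max_gap`."""
    per_annotator = defaultdict(dict)
    for (annotator, group), rate in qualified_rates(labels).items():
        per_annotator[annotator][group] = rate
    return sorted(
        annotator
        for annotator, rates in per_annotator.items()
        if max(rates.values()) - min(rates.values()) > max_gap
    )

# Hypothetical labels: (annotator, candidate group, marked qualified?).
labels = (
    [("ann1", "group_a", q) for q in (1, 1, 1, 0)]
    + [("ann1", "group_b", q) for q in (1, 0, 0, 0)]
    + [("ann2", "group_a", q) for q in (1, 1, 0, 0)]
    + [("ann2", "group_b", q) for q in (1, 0, 1, 0)]
)
print(flag_annotators(labels))
```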

Algorithm Design Bias: Developers decide which factors or metrics matter most for predicting success. If those factors are directly or indirectly correlated with a person's race, gender, or socio-economic status, the algorithm may contain hidden biases.
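Indirect relationships can be tested before a feature is used. The sketch below, built on hypothetical `postcode_area` and `group` fields, measures how accurately a single categorical feature predicts a protected attribute compared with simply guessing the most common group. It assumes protected attributes are available for auditing only, never as model inputs.

```python
from collections import Counter, defaultdict

def proxy_strength(rows, feature, protected):
    """Compare how well `feature` alone predicts `protected` against a majority guess.

    Returns (accuracy_from_feature, majority_baseline). A large gap between the two
    suggests the feature could act as a proxy for the protected attribute.
    """
    by_value = defaultdict(Counter)
    for row in rows:
        by_value[row[feature]][row[protected]] += 1
    total = len(rows)
    # Accuracy when guessing the most common group for each feature value.
    accuracy = sum(max(c.values()) for c in by_value.values()) / total
    baseline = max(Counter(row[protected] for row in rows).values()) / total
    return accuracy, baseline

# Hypothetical audit data: a postcode-area feature and a protected group label.
rows = (
    [{"postcode_area": "P1", "group": "group_a"}] * 4
    + [{"postcode_area": "P1", "group": "group_b"}] * 1
    + [{"postcode_area": "P2", "group": "group_b"}] * 4
    + [{"postcode_area": "P2", "group": "group_a"}] * 1
)
accuracy, baseline = proxy_strength(rows, "postcode_area", "group")
print(f"accuracy from postcode: {accuracy:.2f}, majority baseline: {baseline:.2f}")
```

Here the postcode predicts group membership 80% of the time against a 50% baseline, so a model relying on it could discriminate without ever seeing the group label. In-sample accuracy is optimistic for features with many rare values (a value unique to each candidate "predicts" perfectly), so measuring on held-out data is safer.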

Deployment Bias: Bias can also emerge after training, when the AI system meets real-world hiring data. For instance, if a company uses the tool in contexts it was not designed for, errors and biases can multiply.
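Deployment bias often shows up first as a shift between the data a model was trained on and the data it now scores. A common way to quantify that shift is the population stability index (PSI), sketched here for one categorical feature. The field values and the scenario (a model trained on engineering applicants reused for sales roles) are hypothetical, and the cut-offs in the docstring are widely used rules of thumb rather than a standard.

```python
import math
from collections import Counter

def population_stability_index(expected_values, actual_values, eps=1e-4):
    """PSI = sum over categories of (a - e) * ln(a / e).

    e and a are a category's shares in the training (expected) and deployment
    (actual) data; `eps` avoids division by zero for categories missing on one side.
    Common rule of thumb: < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 large shift.
    """
    expected, actual = Counter(expected_values), Counter(actual_values)
    psi = 0.0
    for category in set(expected) | set(actual):
        e = max(expected[category] / len(expected_values), eps)
        a = max(actual[category] / len(actual_values), eps)
        psi += (a - e) * math.log(a / e)
    return psi

# Hypothetical degree fields: training applicants vs. applicants after redeployment.
train_fields = ["cs"] * 70 + ["business"] * 20 + ["other"] * 10
live_fields = ["cs"] * 20 + ["business"] * 60 + ["other"] * 20
print(f"PSI = {population_stability_index(train_fields, live_fields):.2f}")
```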

Why Bias Matters in Hiring

Biased AI systems can have ethical, legal, and reputational consequences. A flawed algorithm might:

- Exclude qualified candidates by relying, unnoticed, on irrelevant or discriminatory features.

- Expose companies to lawsuits under equal-opportunity laws.

- Erode employee trust and undermine the company's commitment to diversity.

- Damage employer branding by signaling that hiring practices are unfair.

These effects go beyond harm to individuals: they also keep companies from attracting the diverse talent that drives innovative and inclusive growth. For organizations implementing AI-powered solutions, fairness should be seen not only as a compliance requirement but also as a strategic advantage.

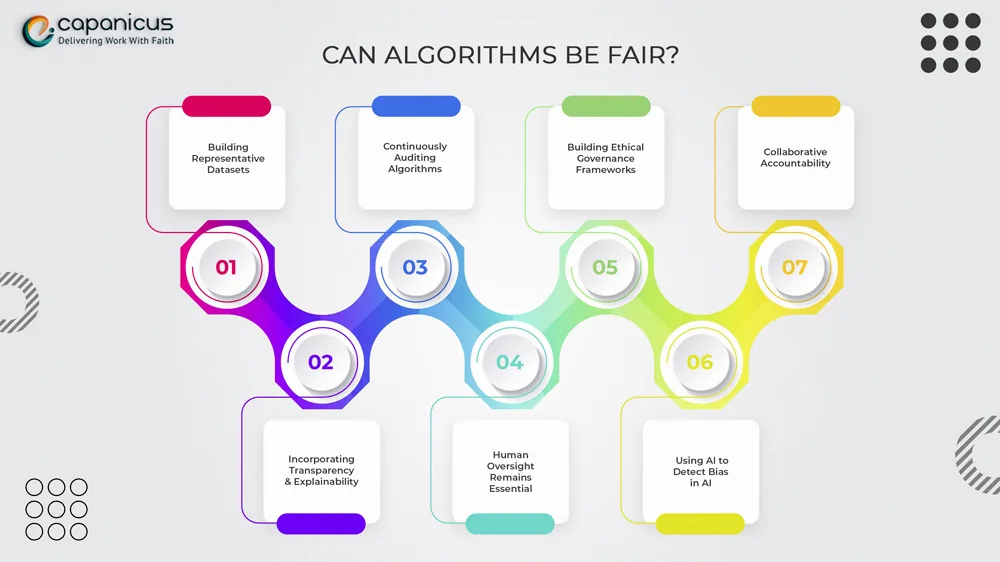

Can Algorithms Be Fair?

Absolute fairness may be difficult to achieve, but it is possible to design AI Powered Solutions that minimize bias and uphold ethical standards. Developers and organizations should focus on building “responsible AI,” an approach that prioritizes transparency, accountability, and inclusivity. The following strategies can help.

- Building Representative Datasets

One of the most effective ways to reduce bias is to start with diverse, representative data. AI teams should verify that their datasets cover a range of demographics, experience levels, and educational backgrounds. With balanced demographic coverage, algorithms generalize better and are less likely to overfit to specific groups. For example, combining hiring data from multiple regions instead of relying on a single area makes recruitment fairer in multinational companies.

Regular data audits are equally important. Finding gaps in the data, for instance underrepresented minority candidates, lets developers correct the imbalance before it propagates through the system.
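A data audit for gaps can be as simple as counting examples per combination of group and role. This sketch assumes hypothetical `group` and `role` fields and an arbitrary minimum of 30 examples per cell; groups that never appear in the data can only be caught if you pass them in explicitly.

```python
from collections import Counter
from itertools import product

def find_data_holes(records, group_key, role_key, min_count=30, expected_groups=()):
    """List (group, role, count) cells with fewer than `min_count` training examples."""
    counts = Counter((r[group_key], r[role_key]) for r in records)
    # Add groups you expect but that never appear; they would otherwise be invisible.
    groups = {r[group_key] for r in records} | set(expected_groups)
    roles = {r[role_key] for r in records}
    return sorted(
        (g, role, counts[(g, role)])
        for g, role in product(groups, roles)
        if counts[(g, role)] < min_count
    )

# Hypothetical training records.
records = (
    [{"group": "a", "role": "engineer"}] * 50
    + [{"group": "b", "role": "engineer"}] * 5
    + [{"group": "a", "role": "analyst"}] * 40
    + [{"group": "b", "role": "analyst"}] * 35
)
print(find_data_holes(records, "group", "role"))
print(find_data_holes(records, "group", "role", expected_groups=("c",)))
```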

- Incorporating Transparency and Explainability

Many AI-driven hiring systems are “black boxes”: they make decisions that even their developers cannot explain. This lack of transparency raises questions of accountability. Explainable AI (XAI) addresses the problem by providing human-understandable insight into how an algorithm reaches its decisions. For example, an explainable recruiting tool could show which attributes, such as skills, experience, or certifications, most influenced a decision.

Transparency gives hiring managers confidence in the process and also enables ethical oversight. If bias is present, a clear view of the model helps teams identify and fix its source more quickly.
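For a linear scoring model, an explanation can be exact: each feature contributes its weight times its value. The sketch below uses hypothetical weights and features; for non-linear models, libraries such as SHAP approximate the same kind of per-feature breakdown.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Split a linear screening score into per-feature contributions (weight * value).

    Returns the total score and the contributions ranked by absolute size.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical model weights and one candidate's (already normalized) features.
weights = {"years_experience": 0.4, "skills_match": 1.2,
           "certifications": 0.3, "employment_gap": -0.5}
candidate = {"years_experience": 0.5, "skills_match": 0.8,
             "certifications": 1.0, "employment_gap": 1.0}

score, ranked = explain_linear_score(weights, candidate)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"  {name:17s} {contribution:+.2f}")
```

After skills, the hypothetical `employment_gap` feature is the largest driver of this score. A reviewer might then ask whether it penalizes candidates who took caregiving or medical leave, which is the kind of question transparency is meant to raise.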

- Continuously Auditing Algorithms

AI systems change as they take in new data, and without continuous tracking their effectiveness and fairness can drift. Regular algorithmic audits uncover differences in outcomes between groups of candidates. Audits should evaluate:

- How often candidates of different genders, ethnicities, and age groups are selected.

- Whether predictions are equally accurate across all demographic segments.

- Whether candidates with equivalent qualifications receive the same recommendations.
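The first two audit questions can be answered directly from logged decisions. This sketch assumes each record has hypothetical `group`, `selected`, and `qualified` fields, where `qualified` is ground truth established independently of the model (for example, a later human assessment). It reports each group's selection rate, its impact ratio relative to the highest-rate group, and its true-positive rate, that is, how often genuinely qualified candidates were selected.

```python
from collections import defaultdict

def audit(candidates, threshold=0.8):
    """Per-group selection rate, impact ratio, and true-positive rate.

    Each candidate is a dict with hypothetical keys:
      group: demographic group (recorded for auditing only)
      selected: whether the model recommended the candidate
      qualified: independently established ground truth
    """
    stats = defaultdict(lambda: {"n": 0, "sel": 0, "qual": 0, "qual_sel": 0})
    for c in candidates:
        s = stats[c["group"]]
        s["n"] += 1
        s["sel"] += int(c["selected"])
        s["qual"] += int(c["qualified"])
        s["qual_sel"] += int(c["selected"] and c["qualified"])

    report = {
        g: {
            "selection_rate": s["sel"] / s["n"],
            # Equal opportunity: share of qualified candidates the model selected.
            "true_positive_rate": s["qual_sel"] / s["qual"] if s["qual"] else float("nan"),
        }
        for g, s in stats.items()
    }
    best = max(r["selection_rate"] for r in report.values())
    for r in report.values():
        # Impact ratio: this group's selection rate relative to the highest group's rate.
        r["impact_ratio"] = r["selection_rate"] / best if best else float("nan")
        r["flagged"] = r["impact_ratio"] < threshold
    return report

# Hypothetical logged outcomes: (group, selected, qualified, number of candidates).
rows = [
    ("a", True, True, 5), ("a", False, True, 1), ("a", False, False, 4),
    ("b", True, True, 2), ("b", False, True, 4), ("b", False, False, 4),
]
candidates = [
    {"group": g, "selected": s, "qualified": q}
    for g, s, q, count in rows
    for _ in range(count)
]
for group, m in audit(candidates).items():
    print(f"{group}: selection={m['selection_rate']:.2f} impact={m['impact_ratio']:.2f} "
          f"tpr={m['true_positive_rate']:.2f} flagged={m['flagged']}")
```

The 0.8 default echoes the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures; it is a screening heuristic rather than a legal safe harbor, and small groups can cross it by chance.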

Bias-detection mechanisms that flag unusual patterns immediately make it possible to intervene early, before harm is done, rather than managing a crisis after the fact.
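To flag patterns as they happen, an impact-ratio check can run on every batch of decisions, for example weekly. The function name, batch format, 0.8 threshold, and minimum group size below are hypothetical choices for this sketch.

```python
def flag_batch(selected_by_group, applicants_by_group, threshold=0.8, min_applicants=20):
    """Return groups whose impact ratio falls below `threshold` in one batch of decisions."""
    rates = {
        g: selected_by_group.get(g, 0) / n
        for g, n in applicants_by_group.items()
        if n >= min_applicants  # tiny groups give noisy rates; skip rather than over-alert
    }
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []
    return sorted(g for g, r in rates.items() if r / best < threshold)

# Hypothetical weekly batches: applicants and selections per group.
print(flag_batch({"a": 20, "b": 15}, {"a": 100, "b": 80}))  # rates 0.20 vs 0.19
print(flag_batch({"a": 25, "b": 8}, {"a": 100, "b": 80}))   # rates 0.25 vs 0.10
```

Skipping small groups avoids false alarms but can hide real problems, so those groups may need to be pooled across several batches before checking.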

- Human Oversight Remains Essential

AI is a tool that should support human judgment, not replace it. In hiring, human involvement ensures that final decisions account for context, empathy, and organizational culture, which no algorithm can fully replicate. Recruiters can use AI for repetitive tasks such as resume screening, while humans evaluate shortlisted candidates holistically. This collaboration between people and machines preserves both efficiency and fairness. Companies also need to train hiring managers on the limitations of AI-powered solutions; that awareness keeps people accountable when they interpret AI outputs.
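One way to keep humans in charge is to make the model's output a triage step rather than a decision. In this sketch the `Candidate` type, score scale, cutoff, and margin are all hypothetical; the point is that borderline candidates are routed to a recruiter instead of being dropped automatically, and no code path issues a hire.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    score: float  # hypothetical model screening score in [0, 1]

def triage(candidates, cutoff=0.6, margin=0.1):
    """Route candidates into three queues; final hiring decisions stay with humans.

    - score >= cutoff + margin: shortlisted for a human interview
    - score within `margin` below that: sent to a recruiter for manual review
    - otherwise: not advanced by the automated screen
    """
    shortlist, human_review, not_advanced = [], [], []
    for c in candidates:
        if c.score >= cutoff + margin:
            shortlist.append(c)
        elif c.score >= cutoff - margin:
            human_review.append(c)
        else:
            not_advanced.append(c)
    return shortlist, human_review, not_advanced

shortlist, review, rest = triage(
    [Candidate("A", 0.9), Candidate("B", 0.65), Candidate("C", 0.3)]
)
print([c.name for c in shortlist], [c.name for c in review], [c.name for c in rest])
```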

- Building Ethical Governance Frameworks

Developers and employers must work together on ethical AI frameworks that define how fairness is measured, monitored, and enforced.

A strong governance strategy comprises:

- Thorough documentation of how the model was built and tested.

- Ethical principles that comply with regulations such as the GDPR and local employment laws.

- Diverse voices, including data scientists, HR professionals, and social scientists, whose input is considered during development.

Such a multidisciplinary approach helps keep technical accuracy aligned with ethical obligations.
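Documentation is easier to enforce when it is structured. The sketch below shows a minimal record in the spirit of "model cards"; every field name and value is a hypothetical example, and a `missing_fields` check lets a release process refuse to deploy a model whose documentation is incomplete.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelRecord:
    """Minimal documentation record for a screening model (fields are illustrative)."""
    name: str
    version: str
    intended_use: str
    training_data: str
    excluded_features: list[str] = field(default_factory=list)
    fairness_tests: dict[str, float] = field(default_factory=dict)
    reviewers: list[str] = field(default_factory=list)
    legal_basis: str = ""

    def missing_fields(self):
        """Names of fields left empty, so incomplete records can block deployment."""
        return [k for k, v in asdict(self).items() if v in ("", [], {})]

record = ModelRecord(
    name="resume-screener",
    version="1.3.0",
    intended_use="Rank applications for review; not for final hiring decisions.",
    training_data="Multi-region applications (hypothetical description)",
    excluded_features=["name", "photo", "date_of_birth"],
    fairness_tests={"min_impact_ratio": 0.86},
    reviewers=["data science", "HR", "legal"],
)
print(json.dumps(asdict(record), indent=2))
print("missing:", record.missing_fields())
```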

- Using AI to Detect Bias in AI

AI can also help limit the damage caused by bias by assisting in bias reduction itself. Fairness-testing frameworks and meta-learning algorithms can analyze hiring data automatically and detect bias built into it. For example, bias-correction tools can adjust models so that selection rates are equalized across candidate groups. These advances show that AI-driven technology can move from being a source of the problem to being part of the solution.
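As a concrete example of one bias-correction technique, the sketch below implements reweighing (Kamiran and Calders), a pre-processing method that gives each training example the weight `P(group) * P(label) / P(group, label)`. After weighting, every group has the same positive ("hired") rate as the data as a whole. The data is hypothetical, and open-source toolkits such as AIF360 and Fairlearn offer maintained implementations of this or related methods.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y) (Kamiran and Calders).

    In the weighted data the label is independent of the group, so each group's
    weighted positive rate equals the overall positive rate.
    """
    n = len(labels)
    p_group = Counter(groups)
    p_label = Counter(labels)
    p_joint = Counter(zip(groups, labels))
    return [
        (p_group[g] / n) * (p_label[y] / n) / (p_joint[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of label-1 ("hired") examples within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical history: group "a" hired 8 of 10, group "b" hired 2 of 10.
groups = ["a"] * 10 + ["b"] * 10
labels = [1] * 8 + [0] * 2 + [1] * 2 + [0] * 8
weights = reweighing_weights(groups, labels)
for g in ("a", "b"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
```

Equal rates in the weighted training data do not guarantee equal selection rates from the retrained model, so the audit step still applies afterward.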

- Collaborative Accountability

Mitigating bias is not a job for developers alone. Fair AI hiring systems require collaboration among several stakeholders:

- Developers: to maintain algorithmic integrity through ethical coding practices.

- HR leaders: to define fairness goals for the use of recruitment technology.

- Compliance experts: to assess AI tools for compliance with anti-discrimination laws.

- Policy-makers: to set industry-wide fairness standards.

The recruiting landscape will become fair only when these groups cooperate and share the work.

The Business Case for Fair AI

Using AI Powered Solutions ethically is not only the right thing to do; it also brings business benefits. Companies with diverse workforces are often reported to outperform competitors on innovation, customer satisfaction, and profitability, so automated systems that unintentionally filter out diversity weaken an organization's ability to compete. By committing to transparent and fair AI-powered solutions, enterprises can:

- Access larger talent pools.

- Lower turnover by building recruitment processes that reflect inclusion as a core value.

- Strengthen their reputation as fair employers.

- Put their staff among the first to benefit from ethical technology adoption.

Fairness becomes a distinguishing factor in attracting the most talented people and earning their trust.

The Road Ahead

The discussion of AI bias in hiring is far from settled. As regulations tighten and technology improves, developers must build algorithms that not only serve their purpose but are also socially responsible. Companies using AI-powered tools need to understand that fairness is not a constraint but an optimization goal. Ethical AI does not slow innovation; it sustains it.

Automation can make hiring more efficient, but fairness makes it valuable. The future of recruitment lies in solutions that combine human empathy with machine accuracy. Developers have the tools and expertise to lead this change. The real question is not whether fair algorithms can be created, but whether organizations are willing to commit to creating them.

Conclusion

AI-driven solutions are changing how companies hire worldwide. Automation brings efficiency, but fairness remains the true measure of progress. Developers can create fair algorithms if they build in inclusion, transparency, and accountability at every stage, from data collection to deployment. Companies, in turn, must commit to continuous auditing, human oversight, and ethical integrity.

Fairness is not merely a feature that can be coded into an algorithm; it is a shared commitment. Placing that value at the core of AI-powered recruitment will define the next generation of responsible technology.